Artificial Intuition

A New Possible Path To Artificial Intelligence

by Monica Anderson

Bizarre Domains

Several disciplines such as Chaos Theory, Complexity Theory, and the new discipline of Systems Biology concern themselves with complex systems of all kinds. No matter which direction you approach from, you will soon discover a set of underlying problem types that make these fields resist analysis and modeling efforts using the established methods of conventional Science.

Depending on your discipline, you would be discussing these domains using labels like "Impredicative Processes", "Closed Loops of Causality", or just "Complex Systems".

Dr. Stephen Kercel used the term "Bizarre Systems" at the ANNIE-99 conference. I prefer this term over the others and have adopted it in order to gather the known problem types, diverse as they may be, under one short, unique label. The label avoids previously established definitions of words like "complex" and "intractable". I use the term "Bizarre Domain" when discussing problem domains involving Bizarre Systems.

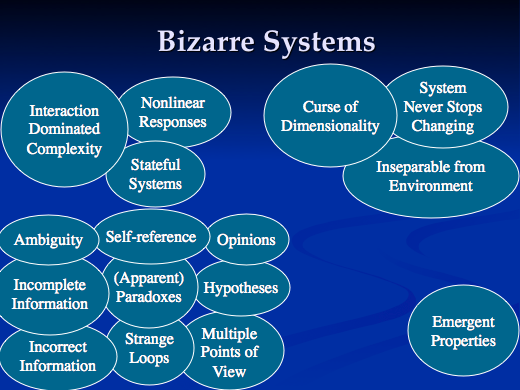

In my own analysis of problem domains that require Intelligence, I have enumerated, somewhat arbitrarily, 16 problem types, which I have grouped into four categories. Other sources have used other groupings or none, and the problem types sometimes don't quite correspond across analyses, but there is much overlap between the various sources.

The specifics of the list are surprisingly unimportant for the discussion at hand since Artificial Intuition is capable of avoiding (by side-stepping) all of them. I examine them in this much depth only to demonstrate what kinds of problems Logic-based systems are facing.

My main interest is in using Artificial Intuition to discover Semantics in text, and in using it as a step towards Artificial Intelligence. Therefore most of the examples below talk about building computer-based models of languages and of the world. Note, however, that Artificial Intuition-based language and world models are so different from Logic-based models that some may dispute whether they are models at all. While operational and useful, they lack many of the advantages of Logic-based models. This is discussed in detail later.

Chaotic Systems

The behavior of Chaotic Systems cannot be reliably predicted. Specifically, the further into the future you want to make a prediction, the less reliable the prediction will be.
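The claim that predictions degrade the further ahead they reach can be illustrated with the logistic map, a standard textbook example of a chaotic system, chosen here for illustration rather than taken from the discussion above. The minimal Python sketch below runs two trajectories whose starting points differ by one part in a billion; the function names and the parameter value r = 4.0 (a well-known chaotic setting) are my assumptions.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x), returning steps + 1 values."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0, delta=1e-9, r=4.0, steps=50):
    """Gap between two trajectories whose starting points differ by delta."""
    a = logistic_trajectory(x0, r, steps)
    b = logistic_trajectory(x0 + delta, r, steps)
    return [abs(p - q) for p, q in zip(a, b)]


if __name__ == "__main__":
    gaps = divergence(0.2)
    for t in range(0, 51, 10):
        # Same deterministic rule, nearly the same start, yet the forecasts drift apart.
        print(f"step {t:2d}: gap = {gaps[t]:.2e}")
```

At r = 4.0 the gap roughly doubles on average at each step, so after about thirty steps the two "forecasts" are unrelated, even though the rule itself is fully deterministic.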

Chaotic Systems contain at least one (but often all three) of the following properties:

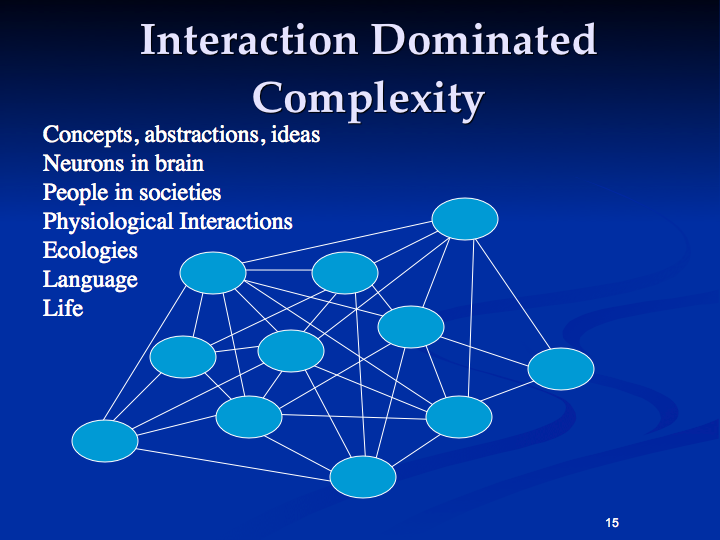

The system contains Interaction Dominated Complexity. This is my term for systems where the main source of complexity is not the size of the system or of its components but the high degree of interconnectedness between the components, combined with the large number of components. In such systems the components can be simple and similar; in fact, they could be nearly identical. The function of a component is mainly determined by which other components it is connected to. Examples of such systems include the brain, which contains numerous but nearly identical neurons interconnected by numerous connecting elements such as synapses or ganglia; a group of people, each irregularly interacting with many others; and the words of a language and their sequencing into sentences. This kind of complexity is very common in nature. Analysis of any sizable system exhibiting this property by using Logic is practically impossible, and for some systems may be impossible in principle.

The system contains Interaction Dominated Complexity. This is

my term for systems where the main source of complexity is not the

size of the system or its components but the high degree of

interconnectedness between the components, and the large number of

components. In such systems the components can be simple and similar;

in fact, they could be nearly identical. The function of a component

is mainly determined by what other components it is connected

to. Examples of this kind of systems would be the brain, that contains

numerous but nearly identical neurons interconnected by numerous

connecting elements such as synapses or ganglions; or consider a group

of people, each irregularly interacting with many others; or the words

of a language and their sequencing into sentences. This kind of

complexity is very common in nature. Analysis of any sizable system

exhibiting this property by using Logic is practically impossible, and

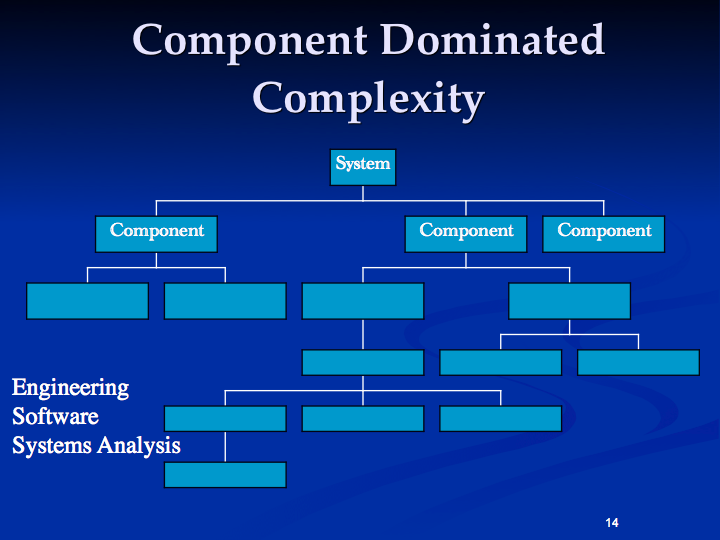

for some systems, may be impossible in principle. This is very different from systems exhibiting the more popular Component

Dominated Complexity where we try to keep the interfaces between the

components simple and well-defined and put all of the complexity inside

the components. Any component's function is determined by the specifics of the

component, and the components are designed to be distinct and of different

types. This is the kind of complexity we as humans can handle and that we strive

for (in order to manage the complexity) when building an opera house, an ocean

liner, or a large software system. This kind of complexity is common in human

artifacts, and provides little resistance to Logic-based modeling, analysis,

synthesis, or manipulation.

This is very different from systems exhibiting the more popular Component

Dominated Complexity where we try to keep the interfaces between the

components simple and well-defined and put all of the complexity inside

the components. Any component's function is determined by the specifics of the

component, and the components are designed to be distinct and of different

types. This is the kind of complexity we as humans can handle and that we strive

for (in order to manage the complexity) when building an opera house, an ocean

liner, or a large software system. This kind of complexity is common in human

artifacts, and provides little resistance to Logic-based modeling, analysis,

synthesis, or manipulation.-

Systems containing components with non-linear responses (transfer functions). Neurons in brains, for example, apply thresholds to the levels of their input signals. (This example uses a very simplified view of neuron behavior that ignores the spiking nature of the signaling.)

Systems with components that contain internal state; in other words, memory. Again, neurons in the brain provide an example of this behavior.
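The last two properties, non-linear responses and internal state, can be seen together in a toy unit. This Python sketch uses the same simplified, non-spiking view of neurons as the note above; the class name, the threshold of 1.0, and the leak factor of 0.5 are illustrative assumptions, not a model of real neurons.

```python
class ThresholdUnit:
    """Toy unit with a thresholded (non-linear) output and a leaky internal state.

    Hypothetical illustration only; real neurons are far more complex.
    """

    def __init__(self, threshold=1.0, leak=0.5):
        self.threshold = threshold  # non-linearity: output is all-or-nothing
        self.leak = leak            # fraction of the accumulated level kept each step
        self.level = 0.0            # internal state, i.e. memory of past inputs

    def step(self, signal):
        """Accumulate input; fire (return 1) and reset if the level reaches the threshold."""
        self.level = self.level * self.leak + signal
        if self.level >= self.threshold:
            self.level = 0.0
            return 1
        return 0


if __name__ == "__main__":
    unit = ThresholdUnit()
    # The same input produces different outputs depending on history.
    print([unit.step(0.6) for _ in range(4)])  # [0, 0, 1, 0]
```

Because the output depends on both the current input and the accumulated level, a single observation of input and output does not reveal how the unit will respond next time.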

Systems that Require a Holistic Stance

I found three reasons a System might resist a "Reductionist" style analysis (i.e. analysis by breaking down the system into simpler parts that can then either be understood or broken down further). Such a system requires the opposite approach, named "Holistic" analysis, which means it has to be viewed as a unit while also taking into account the system's environment.

There are internal, external, and temporal reasons to adopt a Holistic stance.

This group of problem types was named "The Frame Problem" when discussed by John McCarthy and Patrick Hayes in 1969.

The term "Holism" was coined by J. C. Smuts in 1926 and is still used quite frequently in Philosophy and Epistemology. A few decades ago the term "Holistic Medicine" became popular; it denoted a view of Medical Science that incorporated the patient's environment (including diet, exposure to environmental toxins, stress levels, etc.) into the analysis, which sounds like a good idea. Sadly, marketers of crystals, aromatherapy, and other nonsense adopted and abused the term "Holistic" to the point where it now has negative connotations for most people. I need to discuss the concept in the original Philosophical sense, so I'm taking the word back.


The Curse of Dimensionality. Consider building a model of the world. The objects in the world, their properties, and the possible values of these properties are all potentially numerous. The number of possible types of objects, and the number of possible interactions between properties of multiple objects, increase catastrophically through a combinatorial explosion as the number of dimensions (distinct properties) grows. This causes problems in fields like Machine Learning and is one reason real-world systems resist analysis using Logical methods.
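The arithmetic behind this explosion is easy to show. The Python sketch below assumes, purely for illustration, that every property can take ten values independently of the others, and it counts only unordered pairs of object types as a crude stand-in for "interactions"; the function names are hypothetical.

```python
from math import comb


def object_types(num_properties, values_per_property):
    """Distinct kinds of object when each property takes any of its values independently."""
    return values_per_property ** num_properties


def pairwise_interactions(num_properties, values_per_property):
    """Unordered pairs of distinct object types: a crude proxy for interactions to model."""
    return comb(object_types(num_properties, values_per_property), 2)


if __name__ == "__main__":
    for d in (2, 5, 10, 20):
        print(d, object_types(d, 10), pairwise_interactions(d, 10))
```

With ten properties there are already 10^10 object types and about 5 × 10^19 pairs; each additional property multiplies the first number by ten and the second by roughly a hundred.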

Systems that cannot be separated from their environment. If you take a squirrel from its natural habitat and attempt to study it in the laboratory, you will likely get a different result than if you study it in the field. Ecologies of plants and animals are so intertwined that there is no way to isolate any component without affecting the analysis. Blood in a test tube behaves differently from blood circulating in a body.

Systems that never stop and have to keep adapting to a changing environment. We can certainly build chemical factories and oil refineries that operate around the clock every day. But most Scientists would be happiest if they could ask a specific question or do an experiment and receive an answer that would not immediately be made obsolete by changing conditions. A system that is continuously changing is harder to understand analytically, since any static analysis soon becomes obsolete.

Systems that Contain Ambiguity

Here Ambiguity and its two variants are gathered with other similar problem types; Ambiguity, being the dominant problem type of this group, is used as a label for the group.

- Ambiguity

- Incomplete Information

- Incorrect Information

- Self-reference

- (Apparent) paradoxes

- Strange Loops

Douglas Hofstadter discusses the last three of these problem types (Self-reference, paradoxes, and Strange Loops) at length in his book "Gödel, Escher, Bach" and also in the more recent "I Am a Strange Loop". Self-references and paradoxes are much-discussed problems in Philosophy and Logic alike. A Strange Loop is a situation arising in type systems where you notice that A is a kind of B, which is a kind of C, which is a kind of A, closing the loop.

Gödel's Incompleteness Theorem implies that any sufficiently powerful formal system can express self-referential statements that it can neither prove nor refute. If you attempt to build world models, you will most likely run into these three kinds of issues. You had better design your system to handle them without looping forever, but looping is likely to be the least of your worries caused by these problem types.
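The advice about not looping forever can be made concrete for the type-system case described above. The Python sketch below stores a hypothetical is-a hierarchy as a dictionary mapping each type to its declared supertypes and uses standard depth-first search with three node states, so that a Strange Loop is reported instead of being followed endlessly. Detecting the loop is only the first step; deciding what it means is the harder part.

```python
def find_strange_loop(is_a):
    """Return one cycle in an is-a graph (e.g. ['A', 'B', 'C', 'A']) or None.

    is_a maps each type name to the list of types it is declared to be a kind of.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, fully explored
    state = {}
    path = []

    def visit(node):
        state[node] = GREY
        path.append(node)
        for parent in is_a.get(node, []):
            s = state.get(parent, WHITE)
            if s == GREY:  # parent is already on the current path: the loop closes here
                return path[path.index(parent):] + [parent]
            if s == WHITE:
                found = visit(parent)
                if found:
                    return found
        path.pop()
        state[node] = BLACK
        return None

    for node in list(is_a):
        if state.get(node, WHITE) == WHITE:
            found = visit(node)
            if found:
                return found
    return None


if __name__ == "__main__":
    hierarchy = {"A": ["B"], "B": ["C"], "C": ["A"], "dog": ["mammal"], "mammal": ["animal"]}
    print(find_strange_loop(hierarchy))  # ['A', 'B', 'C', 'A']
```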

- Opinions

- Hypotheses

- Multiple Points of View

These three are again shades of each other. A hypothesis might be part of the input data but could also be a (typically temporary) assertion made by the system itself. Opinions are typically held one per agent, and one agent might simultaneously hold multiple persistent points of view on some issue, often depending on context. The strategy recommended for Ambiguity (Distributed Representation) works for these cases as well. Note that Distributed Representation is used in Artificial Intuition (AN) systems; these issues remain unresolved problems for Logic-based representational systems.

In the real world, Ambiguity is everywhere; Incomplete Information is a special case of it, and Incorrect Information is the worst case. We are so good at dealing with these three that we rarely notice how much of them there really is. But if you try to build computer systems that deal with the real world, you will surely notice. In the field of Text Semantics, even short sentences contain words with multiple meanings. Video images from real-world conditions are quite difficult to analyze. Much of spoken and written communication is implied rather than stated, and misinformation, ranging from advertising and other persuasion to outright untruths, malicious or not, is all around us.

Systems that model the world must deal with all these kinds of ambiguity. It is not sufficient simply to "resolve" it by selecting one of the alternative interpretations: doing so is a kind of sampling, and if the selection is made prematurely and badly, the error is likely to be unrecoverable.

A much better way is to design the model to allow multiple possible interpretations to co-exist in parallel, and to let analysis progress along multiple paths until one alternative has significant support. "Distributed Representation" is one commonly used strategy that allows this. Such systems are also resilient against incomplete and incorrect information. Handling parallel interpretations explicitly (by other methods) may quickly become very complicated because of the Curse of Dimensionality and the combinatorial explosion of dependencies.

If these kinds of strategies are not adopted, computer systems operating in ambiguous domains may display "brittle" behavior: when they encounter ambiguous input, especially at the edges of their competence, the quality of their results degrades rapidly. The old computing adage "Garbage In, Garbage Out" expresses this sentiment.
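The principle of keeping alternatives alive until one has significant support can be sketched in a few lines of Python. Note that this is not a Distributed Representation; it is a simpler, explicit bookkeeping scheme that stays manageable only at toy scale. The cue lexicon, its weights, and the 0.7 support margin are hypothetical values chosen for illustration.

```python
def update(support, evidence, cues):
    """Add support to every interpretation the evidence is a cue for.

    support maps interpretation -> accumulated score; no alternative is discarded.
    cues maps an evidence word -> {interpretation: weight} (a hypothetical lexicon).
    """
    for interpretation, weight in cues.get(evidence, {}).items():
        support[interpretation] = support.get(interpretation, 0.0) + weight
    return support


def decide(support, margin=0.7):
    """Commit to the leading interpretation only if it holds >= margin of all support."""
    total = sum(support.values())
    if total <= 0:
        return None
    best = max(support, key=support.get)
    if support[best] / total >= margin:
        return best
    return None  # not enough support yet: every interpretation stays in play


if __name__ == "__main__":
    # Hypothetical cues for the ambiguous word "bank".
    cues = {
        "deposit": {"finance": 1.0, "river": 1.0},  # fits both readings
        "loan": {"finance": 2.0},
        "money": {"finance": 1.0},
        "water": {"river": 1.0},
    }
    support = {"river": 1.0, "finance": 1.0}  # both readings start out equal
    for word in ["deposit", "loan", "money"]:
        update(support, word, cues)
        print(word, decide(support))
```

After "deposit" and "loan" the sketch still declines to choose; only after "money" does the financial reading pass the margin. Committing after "deposit" alone would have been a coin toss.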

Systems that Exhibit Emergent Properties

A single water molecule floating in space does not have a temperature. "Temperature" is a way to statistically describe how much molecules move around relative to each other and is only defined for aggregates of molecules. We say that Temperature is a system level emergent property of aggregates of molecules.
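Because temperature describes relative motion statistically, it can only be computed for a collection of molecules. The Python sketch below estimates a translational temperature from molecular velocities using classical equipartition, treating molecules as point particles and ignoring rotation and vibration (simplifying assumptions). Motion of the group's center of mass is subtracted first, which leaves nothing to measure when there is only one molecule; the constant and function names are my own.

```python
import math
import random

BOLTZMANN = 1.380649e-23                 # J/K, exact SI value
WATER_MASS = 18.015 * 1.66053906660e-27  # kg per H2O molecule (approximate)


def kinetic_temperature(velocities, mass=WATER_MASS):
    """Translational temperature (K) of an aggregate, from 3-D velocities in m/s.

    Motion of the group's center of mass is removed first, which uses up three
    degrees of freedom; with a single molecule nothing is left to measure.
    """
    n = len(velocities)
    if n < 2:
        raise ValueError("temperature is undefined for fewer than two molecules")
    center = [sum(v[i] for v in velocities) / n for i in range(3)]
    squared = sum((v[i] - center[i]) ** 2 for v in velocities for i in range(3))
    # Equipartition: each of the 3(n - 1) remaining degrees of freedom holds kT/2.
    return mass * squared / (3 * (n - 1) * BOLTZMANN)


if __name__ == "__main__":
    random.seed(0)
    sigma = math.sqrt(BOLTZMANN * 300.0 / WATER_MASS)  # per-axis spread at 300 K
    gas = [[random.gauss(0.0, sigma) for _ in range(3)] for _ in range(10_000)]
    print(round(kinetic_temperature(gas)))  # close to 300
```

Moving the whole group at a uniform velocity leaves the result unchanged, which matches the idea that temperature measures how molecules move relative to each other.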

Large numbers of water molecules behave differently based on their "Temperature". We have ice, water, and water vapor. The physical properties of water, such as slipperiness, wetness, and steam pressure, are system-level emergent properties and cannot be observed when examining individual molecules.

Let's consider some other examples. The reliability of a system isn't located in any single component. Reliability is a system level emergent property. Other examples are concepts like the top speed of a car, the lifespan of a person, quality, beauty, and justice. Some people view the concept of emergent properties as vacuous or tautological, but it is a very useful abstraction.

Sometimes the system level emergent properties just provide a more useful point of view. When we use a computer to browse the web we can ignore at least a half-dozen layers of abstraction that hide the electrons moving around in semiconductors.

But often, "More is different". The system level properties sometimes cannot be derived from the component properties because of irreducible complexity. How do we get life from mere lifeless atoms, or intelligence from unintelligent neurons?

The meaning (semantics) of a paragraph of text is not to be found in any single character or even any word. It is an emergent property of the sentence, the paragraph, the rules of language, the topic under discussion, and the shared world model of the writer and the reader.

Emergent properties cannot be analyzed by Reductionist methods precisely because the property "disappears" when we take the system apart to the next level of components.

Summary

The domain of Artificial Intelligence, in other words the domain of problems that require Intelligence, deals with Bizarre Systems. These are defined as systems that contain at least one problem type from each of the four major categories:

- Chaotic Systems

- Systems that require a Holistic stance

- Systems that contain (or otherwise have to deal with) ambiguity

- Systems that exhibit Emergent Properties

The sixteen common kinds of problems have been sorted into four corners, corresponding to these four categories, in the diagram at the start of this section.

In important problem domains such as World Modeling, the Life Sciences, and Semantics, all 16 of these problem types typically occur together. All of them resist Logic-based approaches. Artificial Intuition provides an alternative strategy for dealing with them, since it is immune to all of them by virtue of not using Logic-based models; there is no model there to get confused by these problems.